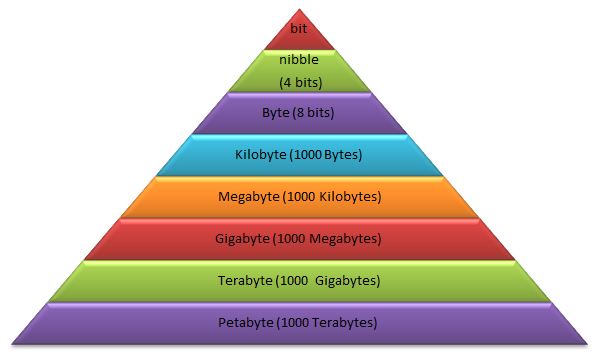

An Introduction to Information Theory, Carlton Downey, November 12, 2013. Topics: entropy and compressing information. Today's recitation will be an introduction to information theory. Information theory studies the quantification of ...

Lectures: L29, Differential Entropy and Evaluation of Mutual Information for Continuous Sources and Channels; L30, Channel Capacity of a Band-Limited Continuous Channel; L31, Introduction to Rate-Distortion Theory.

Courses: Information Theory and its applications in theory of computation, Venkatesan Guruswami, Carnegie Mellon University, Spring 2013. Information Theory, Thomas Cover, Stanford University, Winter 10-11. Pfister, Texas A&M University, 2011.

Disturbances that occur on a communication channel do not limit the accuracy of transmission; what they limit is the rate of transmission of information.

About the course: information theory answers two fundamental questions: what is the maximum data rate at which we can transmit over a communication link, and what is the fundamental limit of data compression?
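As a rough illustration of the claim that noise limits the rate of transmission rather than its accuracy, the sketch below computes the capacity of a binary symmetric channel, C = 1 - H2(p), where p is the probability that a bit is flipped. The binary symmetric channel is not named in the text above; it is assumed here as the simplest standard example, and the function names are illustrative only.

```python
import math


def binary_entropy(p: float) -> float:
    """Binary entropy H2(p) in bits, with H2(0) = H2(1) = 0 by convention."""
    if p in (0.0, 1.0):
        return 0.0
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)


def bsc_capacity(flip_prob: float) -> float:
    """Capacity, in bits per channel use, of a binary symmetric channel
    that flips each transmitted bit independently with probability flip_prob."""
    if not 0.0 <= flip_prob <= 1.0:
        raise ValueError("flip_prob must lie in [0, 1]")
    return 1.0 - binary_entropy(flip_prob)


if __name__ == "__main__":
    # More noise lowers the achievable rate; at p = 0.5 nothing gets through.
    for p in (0.0, 0.01, 0.1, 0.5):
        print(f"flip probability {p:.2f}: capacity {bsc_capacity(p):.3f} bits per use")
```

A noiseless channel (p = 0) carries one bit per use, while a channel that flips bits half the time carries none. In between, reliable communication remains possible, only at a lower rate.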
Pierce later published a popular book [11] which is a great introduction to information theory. Other introductions are listed in reference [1]. A workbook that you may find useful is reference [12]. Shannon's complete collected works have been published [13]. (From Tom Schneider's Information Theory Primer.) Equally likely means that P ...

Introduction to Information Theory: Hamming(7, 4) Code. I am teaching myself information theory from the awesome lectures by David MacKay on YouTube (Information Theory, Pattern Recognition, and Neural Networks). This post is about Lecture 1.

Abstract: This article consists of a very short introduction to classical and quantum information theory. Basic properties of the classical Shannon entropy and the quantum von Neumann entropy are described, along with related concepts such as classical and quantum relative entropy, conditional entropy, and mutual information.

Originally developed by Claude Shannon in the 1940s, the theory of information laid the foundations for the digital revolution, and is now an essential tool in deep space communication, genetics, linguistics, data compression, and brain sciences. In this richly illustrated book, accessible examples ...

An aim of the proposed information systems theory (IST) is to build a bridge between the general systems theory's formalism and the world of information and information technologies, dealing with transformation of information as a common nonmaterial substance, whose models in forms of computer algorithms and programs could be implemented to different material objects, including a human's ...

441 offers an introduction to the quantitative theory of information and its applications to reliable, efficient communication systems.
Topics include mathematical definition and properties of information, source coding theorem, lossless compression of data, optimal lossless coding, noisy communication channels, channel coding theorem, the source channel separation theorem, multiple access.

Information technology (IT) is a popular career field for network professionals who manage the underlying computing infrastructure of a business.

Outline: The quotes; Science, wisdom, and counting; Being different or random; Surprise, information, and miracles; Information (and hope); H (or S) for Entropy.

Introduction to Information Theory. This chapter introduces some of the basic concepts of information theory, as well as the definitions and notations of probabilities that will be used throughout the book. The notion of entropy, which is fundamental to the whole topic of ...

This short course will try to give a short introduction to polar coding and its main ideas. The course is intended for students who have a rough idea of information theory. Chapter 12 of Information Theory (Lecture Notes) will be used as course notes.

Information theory, in the technical sense in which it is used today, goes back to the work of Claude Shannon and was introduced as a means to study and solve problems of communication or transmission of signals over channels.

There are two basic problems in information theory that are very easy to explain. Two people, Alice and Bob, want to communicate over a digital channel over some long period of time, and they know ahead of time the probability that certain messages will be sent. For example, English language sentences are more likely than ...

Covers encoding and binary digits, entropy, language and meaning, efficient encoding and the noisy channel, and explores ways in which information theory relates to physics, cybernetics, psychology, and art.
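The Alice and Bob setting above, where the message probabilities are known ahead of time, is the one in which entropy gives the compression limit. The following is a minimal sketch, assuming a small made-up set of four messages (the message names and probabilities are hypothetical, not taken from any of the sources quoted here). It computes the Shannon entropy H = -sum p log2 p, the average number of bits per message that a lossless code cannot beat.

```python
import math
from typing import Mapping


def shannon_entropy(probs: Mapping[str, float]) -> float:
    """Shannon entropy in bits of a discrete distribution given as
    a mapping from message to probability."""
    if any(p < 0 for p in probs.values()):
        raise ValueError("probabilities must be non-negative")
    if not math.isclose(sum(probs.values()), 1.0, abs_tol=1e-9):
        raise ValueError("probabilities must sum to 1")
    # Zero-probability messages contribute nothing (0 * log 0 is taken as 0).
    return -sum(p * math.log2(p) for p in probs.values() if p > 0)


# Hypothetical message sets, for illustration only.
uniform = {"a": 0.25, "b": 0.25, "c": 0.25, "d": 0.25}
skewed = {"a": 0.5, "b": 0.25, "c": 0.125, "d": 0.125}

if __name__ == "__main__":
    print(f"uniform source: {shannon_entropy(uniform):.2f} bits per message")
    print(f"skewed source:  {shannon_entropy(skewed):.2f} bits per message")
```

When all four messages are equally likely, two bits per message are needed. When some messages are more likely than others, the entropy drops below two bits, which is what makes compression possible.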
Originally developed by Claude Shannon in the 1940s, information theory laid the foundations for the digital revolution, and is now an essential tool in telecommunications, genetics, linguistics ...

Information Theory in the 20th Century. Introduction to channel capacity. A mathematical theory of communication.

Obviously, the most important concept of Shannon's information theory is information. Although we all seem to have an idea of what information is, it's nearly impossible to define it clearly. And, surely enough, the definition given by Shannon seems to come out of nowhere. The amount of information of the introduction and the message.

Lecture 1 of the Course on Information Theory, Pattern Recognition, and Neural Networks. Produced by David MacKay (University of Cambridge).

Information Theory: A Tutorial Introduction, James V Stone, Psychology Department, University of Sheffield, England.

An introduction to Information Theory and Coding Methods, covering theoretical results and algorithms for compression (source coding) and error correction ...

Graduate-level study for engineering students presents elements of modern probability theory, elements of information theory with emphasis on its basic roots in probability theory, and elements of coding theory. Emphasis is on such basic concepts as sets, sample space, random variables, information measure, and capacity. Many reference tables and extensive bibliography.

An Introduction to Information Theory: Symbols, Signals and Noise. Pierce writes in an informal, tutorial style, but does not flinch from presenting the fundamental theorems of information theory. This book provides a good balance between words and equations.

Information theory has made considerable impact in complex systems, and has in part coevolved with complexity science.
Research areas ranging from ecology and biology to aerospace and information technology have all seen benefits from the growth of information theory.

"Uncommonly good ... the most satisfying discussion to be found." Behind the familiar surfaces of the telephone, radio, and television lies a sophisticated and intriguing body of knowledge known as information theory. This is the theory that has permitted the rapid development of all sorts of communication, from color television to the clear transmission of photographs.

Information theory studies the quantification, storage, and communication of information. It was originally proposed by Claude E. Shannon in 1948 to find fundamental limits on signal processing and communication operations such as data compression ...

An Introduction to Information Theory: Symbols, Signals and Noise (Dover Books on Mathematics), 2nd revised edition, by John R. Pierce.

An Introduction to Information Theory, by Fazlollah M. Reza.

Introduction to Information Theory. Outline: Claude E. Shannon; A General Communication System; Shannon's Information Theory (conceptualization of information, modeling of information sources, sending of information).

A broad introduction to this field of study. This tutorial introduces fundamental concepts in information theory.
A series of sixteen lectures covering the core of the book Information Theory, Inference, and Learning Algorithms (Cambridge University Press, 2003), which can be bought at Amazon and is available free online. A subset of these lectures used to constitute a Part III Physics course at the University of Cambridge. The high-resolution videos and all other course material can be downloaded from ...

Information theory, the mathematical theory of communication, has two primary goals: the first is the development of the fundamental theoretical limits on the achievable performance when communicating a given information ...

Francisco J. Escribano, Block 2: Introduction to Information Theory, April 26, 2015. Entropy: the source entropy is a measurement of its information content, and ...

Introduction to Information Theory and Data Compression, Second Edition is ideally suited for an upper-level or graduate course for students in mathematics, engineering, and computer science.

A Brief Introduction to: Information Theory, Excess Entropy and Computational Mechanics, April 1998 (Revised October 2002), David Feldman, College of the Atlantic.

Information Theory: A Tutorial Introduction, James V Stone, Sebtel Press.

This article helps you understand the basis of information theory, its history, and its use in machine learning and artificial intelligence.